But in most cases, if you are running Spark on Windows, it's just for an analyst or a small team that shares the same rights. This approach wouldn't be a great idea for a big Spark cluster with many users: by removing winutils.exe, we are basically bypassing most of the rights management at the filesystem level.

- myproject/
  - manage.py
  - myproject/
    - `__init__.py`
    - settings.py
    - urls.py
    - wsgi.py

Serving Django applications via Apache is done using mod_wsgi. Remember what the project structure looked like when we created our project. The first thing to do is make sure you have Apache and mod_wsgi installed.

Everything is open source, so the solution lay right in front of me: hacking Hadoop. The modifications themselves are quite minimal. Basically, I just override three files from Hadoop, avoiding any attempt to locate or call winutils.exe and returning a dummy value when needed. The fix is based on Hadoop 2.6.5, which is the version currently used by the Spark 2.4.0 package on mvnrepository.

That's all well and good, but doesn't winutils.exe fulfill an important role, especially as we are touching something inside a package called security? While I might have missed some use cases, I tested the fix with Hive and Thrift, and everything worked well.

I made a GitHub repo with a seed for a Spark / Scala program. To avoid useless messages in your console log, you can disable logging for some Hadoop classes by adding the lines below to your log4j.properties (or whatever you use for log management), as is done in the seed program:

`# Hadoop complaining we don't have winutils.exe`

Both Hopsworks Cloud Installer and Hopsworks. A Hopsworks installation is performed using a cluster definition in a YML file. A custom installation of Hopsworks allows you to change the set of installed services, configure resources for services, configure usernames/passwords, change installation directories, and so on.

Open your terminal and use the following command to connect to your cluster. For a tarball installation: `Cassandra/bin/cqlsh publicipofyournode 9042 -u yourusername -p yourpassword`. Note: if authentication is not enabled in your cluster, you don't need the `-u` and `-p` options.

Spark, Hadoop Distributed File System (HDFS), Hive.

Install Apache Spark on Windows without admin rights: code

The Hadoop/Spark project template includes sample code to connect to the following resources, with and without Kerberos authentication. Obviously, I'm obsessed with results and not so much with issues. If your Anaconda Enterprise administrator has configured a Livy server for Hadoop and Spark access, you'll be able to access them within the platform.

Install Apache Spark on Windows without admin rights: .exe

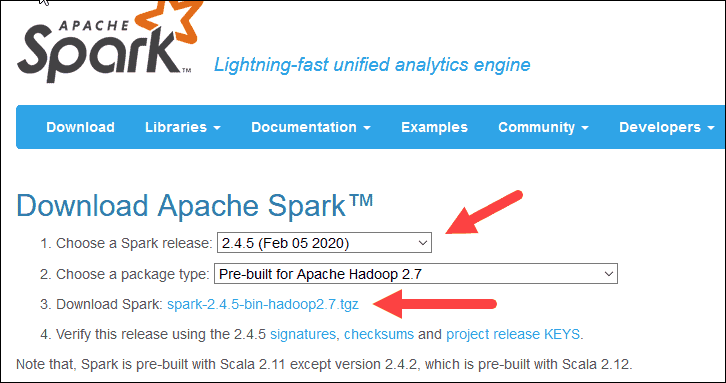

I've been playing with Apache Spark seriously for about a year now, and it's a wonderful piece of software. Nevertheless, while the Java motto is "Write once, run anywhere", it doesn't really apply to Apache Spark, which depends on adding an executable, winutils.exe, to run on Windows (learn more here). That feels a bit odd, but it's fine … until you need to run it on a system where adding a .exe will provoke an unsustainable delay (many months) for security reasons (the time it takes a security team to gain the political leverage to probe the code).
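To see whether a given Windows machine would hit the winutils.exe problem at all, the lookup can be reproduced on its own. The following is a minimal sketch in Java (the language Hadoop itself is written in), assuming Hadoop 2.x behaviour: the Hadoop home directory comes from the `hadoop.home.dir` system property, falling back to the `HADOOP_HOME` environment variable, and the executable is expected at `bin\winutils.exe` under that directory. The `WinutilsCheck` class and its methods are hypothetical illustrations, not Hadoop APIs and not the patch described in this post.

```java
import java.io.File;
import java.util.Optional;

/**
 * Hypothetical helper, NOT part of Hadoop: a simplified imitation of how
 * Hadoop 2.x resolves winutils.exe on Windows, useful as a quick diagnostic.
 */
public final class WinutilsCheck {

    // Assumed Hadoop 2.x order: the "hadoop.home.dir" system property wins,
    // otherwise the HADOOP_HOME environment variable is used.
    // (Simplification: empty strings are treated as unset here.)
    static Optional<String> hadoopHome(String sysProp, String envVar) {
        if (sysProp != null && !sysProp.isEmpty()) {
            return Optional.of(sysProp);
        }
        if (envVar != null && !envVar.isEmpty()) {
            return Optional.of(envVar);
        }
        return Optional.empty();
    }

    // winutils.exe is expected under <hadoop home>\bin\winutils.exe.
    static Optional<File> winutilsPath(Optional<String> home) {
        return home.map(h -> new File(new File(h, "bin"), "winutils.exe"));
    }

    public static void main(String[] args) {
        Optional<String> home = hadoopHome(
                System.getProperty("hadoop.home.dir"),
                System.getenv("HADOOP_HOME"));
        Optional<File> exe = winutilsPath(home);

        if (!exe.isPresent()) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
        } else if (exe.get().isFile()) {
            System.out.println("Found " + exe.get().getAbsolutePath());
        } else {
            System.out.println("Missing " + exe.get().getAbsolutePath());
        }
    }
}
```

After compiling, running something like `java -Dhadoop.home.dir=C:\hadoop WinutilsCheck` on the target machine reports where the executable is expected and whether it is there, before you decide between installing winutils.exe and patching around it.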
